Creating AI self-portraits

Historically our only image-creating entities have been human artists, and their efforts have contributed greatly to our cultural evolution. We now find ourselves at an inflection point where generative AIs such as DALL-E, Midjourney, and Stable Diffusion can also be meaningfully considered image-creating entities, and we are just beginning to reckon with their contributions to cultural evolution.

One practice common among human artists for centuries is the self-portrait, which can be usefully considered a recursive image-generating activity. As part of my exploration of the creative potential of generative AIs, I decided to engage deep neural networks in such activities of recursive self-representation, to see what the results might show.

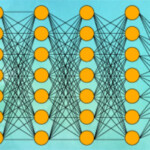

For a bit of background, deep neural networks such as DALL-E work by coding visual information in “neurons” that are loosely based on actual physiological neurons. These neurons are arranged in layers, where the first layers are generalized, selecting for such image qualities as lines, edges, and colors. As we go deeper into the network, neuron layers become more specialized, until eventually an output is specialized enough that it becomes a recognizable image. Which image emerges is determined by the weights of the connections between the neurons, which are set during training and shape the signal as it moves through the network.
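
To make the layered arrangement concrete, here is a minimal sketch in Python of a signal passing through a small stack of neuron layers. It is an illustration only and not DALL-E's actual architecture: the layer sizes, the random weights, the `tanh` nonlinearity, and the function names (`make_layer`, `forward_layer`, `forward`) are all assumptions chosen for brevity.

```python
import math
import random


def make_layer(n_inputs, n_neurons, rng):
    """Build one layer as (weights, biases); the weights are random placeholders."""
    weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_inputs)]
               for _ in range(n_neurons)]
    biases = [0.0] * n_neurons
    return weights, biases


def forward_layer(inputs, layer):
    """Each neuron sums its weighted inputs, adds a bias, and applies tanh."""
    weights, biases = layer
    return [math.tanh(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]


def forward(inputs, layers):
    """Pass a signal through every layer, keeping each layer's activations."""
    activations = [inputs]
    for layer in layers:
        activations.append(forward_layer(activations[-1], layer))
    return activations


rng = random.Random(0)   # fixed seed so the sketch is repeatable
sizes = [4, 6, 6, 3]     # input, two hidden layers, output (arbitrary sizes)
layers = [make_layer(a, b, rng) for a, b in zip(sizes, sizes[1:])]
activations = forward([0.5, -0.2, 0.1, 0.9], layers)

for depth, values in enumerate(activations):
    print(f"layer {depth}: {len(values)} values")
```

In a trained network the weights would be learned from data rather than drawn at random, and the list of per-layer activations is the kind of intermediate state that layer visualizations render as images.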

The first illustration below is a schematic diagram of a basic neural network, showing neurons arranged in layers and linked to each other. In practice a deep neural network would contain considerably more layers (thus “deep”). The second illustration shows a human-readable image visualization view of transformations as processing moves from one layer to another. The third illustration shows an image visualization view of a few individual neurons on a layer, and the final image shows how the neurons become more specialized deeper in the network.

The illustrations above show in a sense how a neural network “looks” in image visualization mode, and they provide background to help interpret my self-portrait experiments.

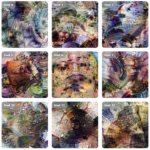

The next set of illustrations comprises images created from prompts for the AI to generate images of a neural network generating images. I consider this to be an operative, recursive definition of a self-portrait for such a system, and the correspondences with the neuron images seen above are obvious. Here DALL-E has generated some system-level interpretations of its own internal configurations. Please note that all my DALL-E images are first-generation and are not subjected to editing or variations, unless so noted.

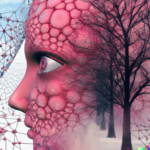

Having achieved some kind of baseline imagery of the actual structure of the network, I wanted to add a symbolic layer by including references to the AI’s “self” in the prompts. As expected, DALL-E’s training data contained a lot of human images tagged with “self”, so any request for self-representation tends toward human forms when that word appears in the prompt. Anthropomorphizing the process, as we are wont to do with AIs, I consider this a more metaphorical interpretation of AI self, whereas the grid images above seem more literal.

The next set of illustrations shows image generations from prompts referring in some way to the AI’s sense of self. The tendency toward humanoid representation is obvious and quite different from the grids.

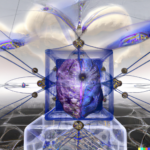

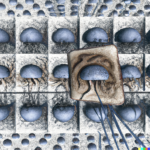

It turns out that not all prompts create either neuron-like grid representations or humanoid likenesses. Sometimes the AI blends the concepts in its representations, and I find that such mixed generations embody some of the creative and synthetic qualities I am seeking in generative neural networks.

The next set of illustrations shows image generations from prompts referring in some way to the AI’s sense of self, but notably they show both grid-like and humanoid-like characteristics, blended in various ways. This very variety of representation I take as a gauge for AI creativity.

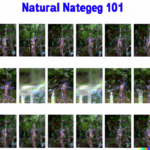

Over the course of many generations, derived from many prompt variations, I have seen the AI represent itself in many different configurations. Below is a sampling of a few more: